If you have spent much time working with SQL Server, then you know it can be frustrating to figure out exactly how much RAM is being consumed at a given moment. Windows task manager makes it look like SQL Server is using everything you give it, so how do you know if it is really enough? For system administrators, making sure the ERP database has the right amount of RAM is important. You have to make sure there is a healthy balance between storage and RAM. In this post, we are going to explore some basic concepts of right sizing RAM on the ERP database. These are geared toward the SMB space, and this is not a guide for high end, large enterprise, heavy load applications. You will need to do much more than this to get everything right. These are just the basics.

I have seen forums that profess to give SQL Server as much RAM as possible. This seems a little over the top, and not based on metrics or data. Epicor also publishes their system recommendations for hardware, and I have used them with success. That said, I am always curious to learn and in this case, to make sure that the ERP database is getting the right diet of compute resources, not too much and not to little.

On the Subject of ERP Database Metrics

Recently, I went through a right sizing exercise on a 200 user Prophet 21 environment. This environment is on version 2017.1, build 2950 as of the time of this publication. Users access the ERP system via a series of 5 remote desktop hosts. Additionally, there is heavy use of SQL Reporting Services, Crystal Reports, and a PHP based web application that provides business intelligence services. Epicor’s system recommendations were to dedicate 8 CPUs and 128Gb of RAM, or half the database size, whichever is larger. I wanted to test the theory with real performance metrics.

There are as many opinions on how to go about right sizing as there are people. I am not dealing with millions of transactions per day on an SQL server with tons of CPUs or a huge amount of RAM. My company is running a typical ERP database for a small to medium enterprise, there is not a justification for having a full-time DBA on staff. I needed something fairly simple to understand. I settled on looking at 3 things.

1) Determining the Database Size

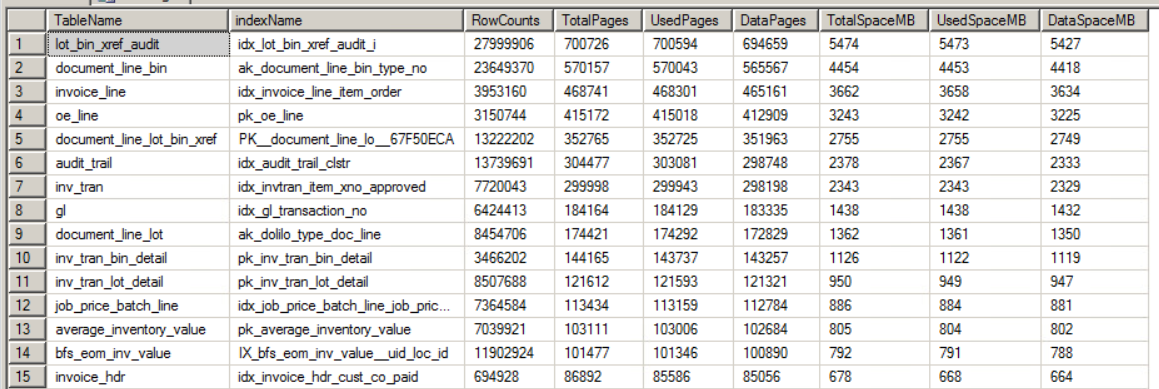

Unfortunately for me, whomever initialized our ERP database gave it an initial size of 100GB. That stinks for a lot of reasons, but for this case, it obscures the actual size of the database. To overcome this problem, I used this query to determine the actual size of each table in the ERP database. From there, I added up the total size in megabytes of all objects, and determined that my ERP database is approximately 47Gb in size.

2) How Long does Data Live in RAM?

Knowing that I am under 50Gb in total data, the next step is to determine how long data is expected to live in RAM. For this, we will track a database statistic called Page Life Expectancy. Put simply, PLE is how long in seconds a page will live if left alone. As more of the memory is actively consumed, the PLE will drop, since the database is going back and forth to disk. To track this number over time, I run the following as an agent job every 10 minutes.

Code Example

INSERT temp_PLE_tracker

SELECT

[object_name],

[counter_name],

[cntr_value],

GETDATE()

FROM

sys.dm_os_performance_counters

WHERE

[object_name] LIKE '%Manager%'

AND [counter_name] = 'Page life expectancy'

There are many theories on what a good PLE value to stay above is. Microsoft used to recommend 300 seconds, but this was developed when server RAM values were in the 2 to 8GB range. This is hardly a useful comparison now. At this point I have been using a rough estimate of 300 seconds per 4Gb of RAM. So on a 64Gb server, I am looking for a PLE of around 4,800 seconds.

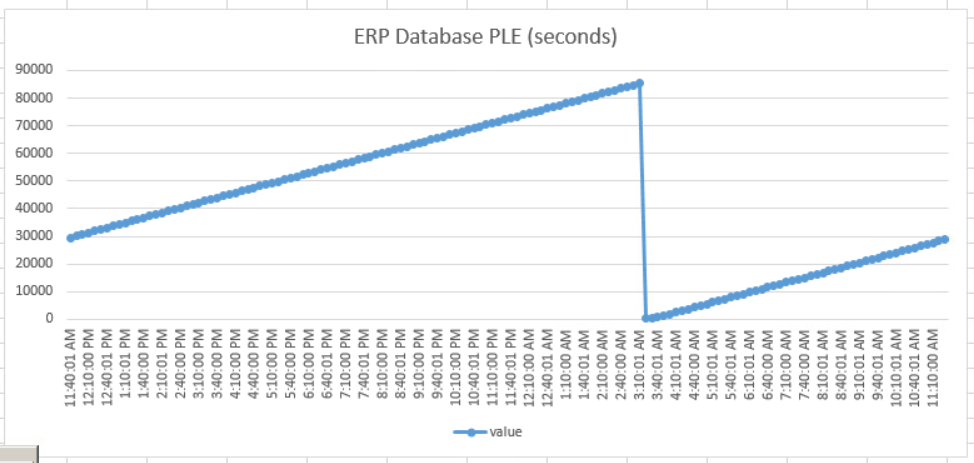

After collecting PLE data for a few days, I can graph the results in Excel to understand what is happening on my ERP database. The graph below is for a typical 24 hour period. It shows a steady climb in PLE until about 3:00AM when it drops dramatically. This huge drop is not a worry. The nightly database backup occurs at 3:05am and since it is disk intensive, I would expect a sharp drop. Once the backup ends, the PLE starts climbing right away. This tells me that the pages are expected to live for as much as 85,000 seconds (23.6 hours). In other words, there is not a lot of risk that I will be hitting the disks all of the time. I am well above my PLE target of 4800, meaning I could likely reduce RAM on the server without a lot of risk.

3) How Often is Windows Hitting the Paging File?

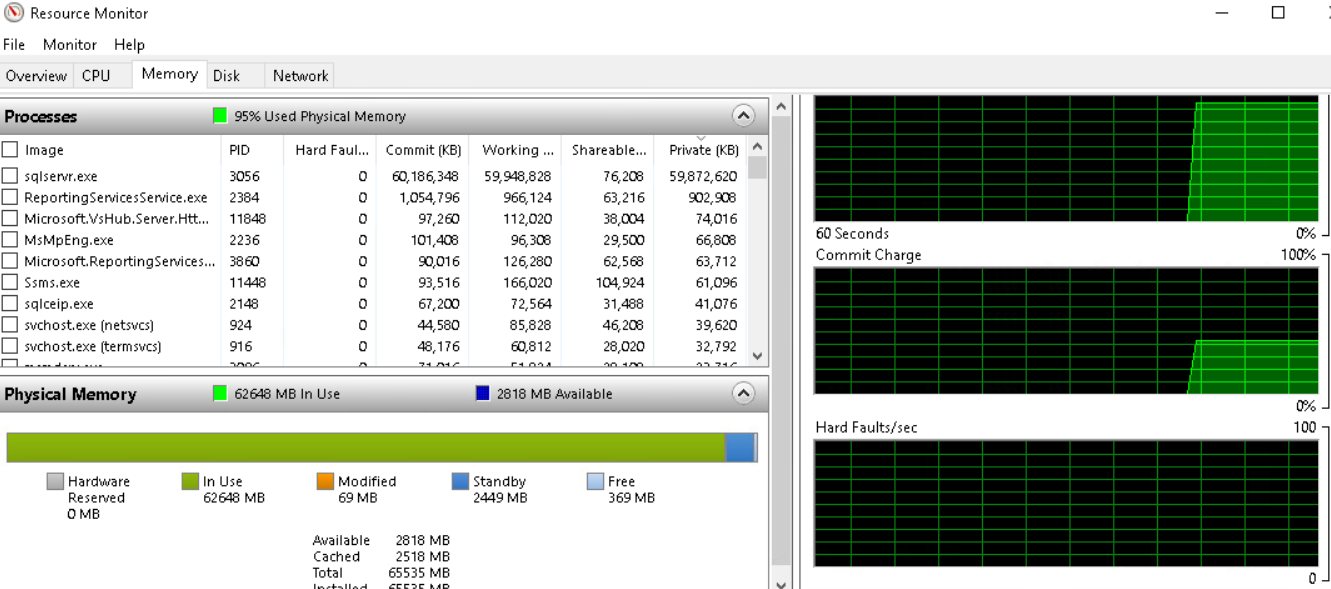

The last thing I wanted to look at was how often the Windows Server OS is hitting the paging file. A real time view of this can be found in the Windows Resource Monitory, and it is called Hard Faults/sec. When you are seeing a lot of hard faults per second, that is an indication that the the system may be running low on memory, or the cache size needs to be adjusted. Remember that you cannot just look at memory on the SQL Instance, you also have to give the operating system some room to operate too.

The goal is not to attain zero hard faults. The goal is to make sure you are not pegging the scale all of the time, thus slowing down overall system performance.

So How Much RAM Already?

In my environment, all of our servers are virtual. We use VMware as a hypervisor. While I am happy to over-commit CPU cores, I am not a big fan of over-commitment with RAM. Usually, this means that RAM is my most valuable resource. As I mentioned earlier, our ERP database server was running 128Gb of RAM, per the ERP vendor’s recommendations. After monitoring and analyzing performance for a week under full load, we decided to cut the RAM down to 64Gb. SQL Server is limited to a max of 58Gb and the rest was left available to the server OS and overhead.

After making this change, we saw no drops in performance. There were no increased in hard faults per second. Additionally, there was no drop in SQL Server Page Life Expectancy. This right size was done to make room for a new ERP API server on the host, and RAM was getting a little scarce. At this point, I have no reason to reduce it further, since there is no pressure on our resources. Having said that, if I were lacking RAM on the host, I could absolutely see testing this again with 48Gb of RAM on the ERP database server.

It would be easy to check the PLE impact, I could simply cut the max available RAM for SQL Server and see what happens. If that went well, reducing overall server RAM would follow. Then it is just a matter of monitoring results.

Remember, These are Basics

These tips are not the end all, be all of RAM right sizing. They are a basic set of tools you can use to make initial adjustments up or down. When you are really trying to fine tune resources, there are more considerations you need to look at. I recommend this presentation as a good place to research other factors you should consider when doing right sizing.

Read more about ERP administration.